The hitchhiker's guide to computer vision

Are you tired of these towardsdatascience/medium tutorials and posts about deep learning? Don’t panic. © Take another one.

So, as I said, there are so many educational resources in the deep learning area that at some point I found myself lost in all that mess. There are tons of towardsdatascience/medium tutorials on how to use something, and most of them are at a beginner’s level (although I enjoyed some of the articles).

I felt that there should be something above the “piece of cake” or “bring it on” levels. Something like “hardcore” or even “nightmare”. In the end, I want resources that bring value, not things I already know. I don’t need detailed tutorials (well, usually); instead, I want to see directions. Some reference points from which I can start my own path. And maybe I can write such an article for others who feel the same way.

So I came up with the idea of a short “how-to-and-how-not-to” post on the computer vision area (mostly from a DL perspective): some links, tips, lifehacks. I hope it adds value for someone. And I hope it won’t be yet another boring tutorial.

Finally, a small disclaimer: these are my personal beliefs and feelings, and they are not necessarily true. Moreover, I feel that some of the points are not optimal solutions, and I would be happy if someone proposed a better option.

Enjoy!

Now, let’s start with the tools and infrastructure for your CV research.

In general, your projects should cover several areas. Each area has a huge number of options, and you can easily get lost. I believe you should just choose one option from each area and stick with it.

These areas are:

Starting simple. Language: without a doubt, Python. The others are way below (sorry, R and Matlab users).

IDE: which IDE will you use? I personally use PyCharm, but I know a lot of people who use VS Code. There are also Jupyter notebooks and Google Colab, and Deepnote is quite a good tool as well. In fact, they are all nice, but not enough for proper R&D nowadays. Don’t get me wrong, I love Jupyter notebooks and use them a lot, but let’s be honest: Jupyter is not an IDE, it is a research prototyping environment. A combination of Jupyter notebooks and a proper IDE will boost your projects a lot.

Frameworks: today there are only two main players in this area, PyTorch and TensorFlow. There are hundreds of comparison articles, and both frameworks are great (some fresh discussion on Reddit). Again, just choose the one you like most and never regret it. My choice is PyTorch, and I love it. There are also wrappers on top of these frameworks; I use PyTorch Lightning, which is amazing stuff. There is a good ecosystem around PyTorch, and I sometimes find something new and interesting there. There is probably something similar for TensorFlow (sorry, too lazy to check).

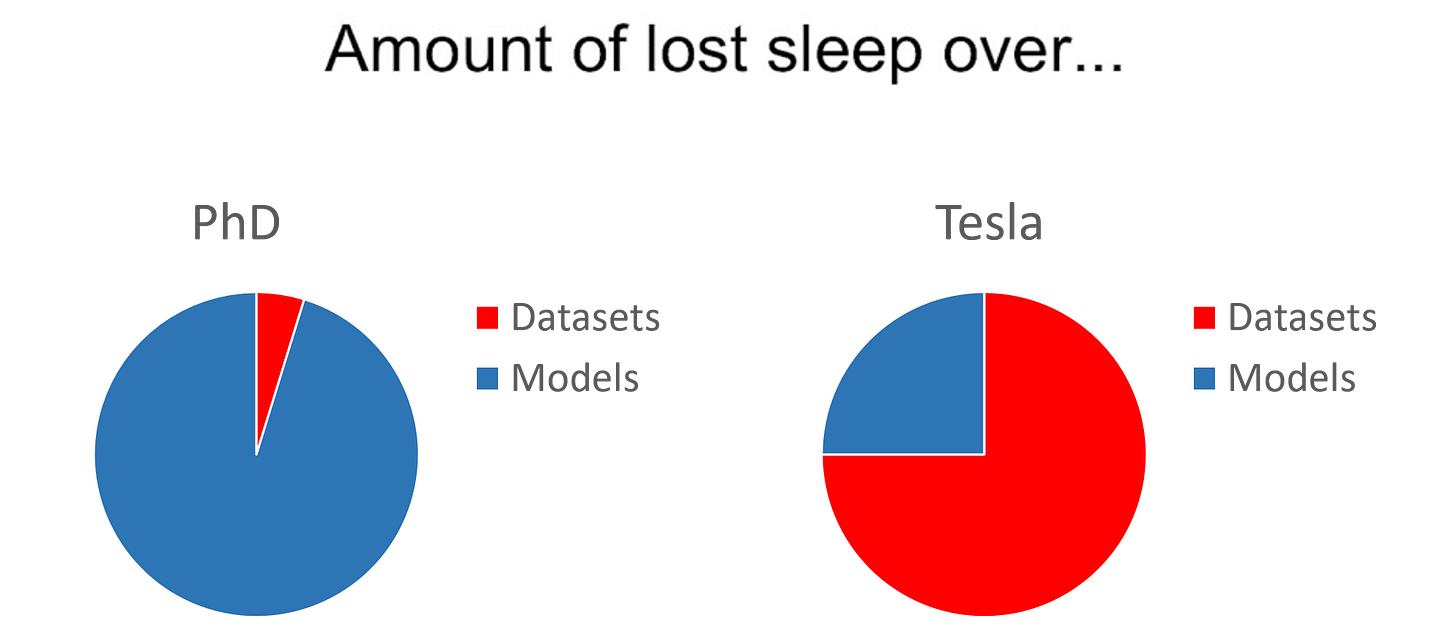

Data management: very important and undeservedly ignored by most people. Andrej Karpathy has some amazing talks about the importance of data, very inspiring, and I highly recommend them (this and this: tbh, they cover a lot of things, but also the importance of data). This is one of his slides, and it says a lot.

I use DVC for data version control, and our team has also created a data registry. We keep track of all changes to the original raw data there (new data, re-annotations, other changes). Enjoying it a lot. Activeloop is also doing nice work: their Hub is an interesting solution and worth attention. One important thing: metadata is highly valuable as well, so never underestimate it. Data labeling could be a separate chapter, but I decided to put it here. Again, there are tons of image annotation tools on the market; choose the one that fits your needs. We use Supervisely at the moment, and it is super convenient for distributed labeling.
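The core idea behind DVC is versioning data by content rather than by file name: it records an MD5 hash of each tracked file in small `.dvc` metafiles that live in Git, while the data itself goes to a cache or remote storage. The sketch below only illustrates that idea with the standard library, including a bit of per-file metadata; it is not DVC’s API or file format, and the `build_manifest` and `diff_manifests` helpers and their JSON-like layout are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files never have to fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict:
    """Map every file's relative path to its content hash and size (hypothetical format)."""
    manifest = {}
    for path in sorted(p for p in data_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(data_dir).as_posix()
        manifest[rel] = {"md5": file_md5(path), "size": path.stat().st_size}
    return manifest


def diff_manifests(old: dict, new: dict) -> dict:
    """List files added, removed, or changed (by content) between two data versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(
            k for k in old.keys() & new.keys() if old[k]["md5"] != new[k]["md5"]
        ),
    }


# Usage: snapshot a toy dataset, re-annotate one label, add one sample, compare.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "img_001.png").write_bytes(b"fake image bytes")
    (root / "labels.json").write_text(json.dumps({"img_001": "cat"}))
    v1 = build_manifest(root)

    (root / "labels.json").write_text(json.dumps({"img_001": "dog"}))
    (root / "img_002.png").write_bytes(b"another fake image")
    v2 = build_manifest(root)

    print(diff_manifests(v1, v2))
    # {'added': ['img_002.png'], 'removed': [], 'changed': ['labels.json']}
```

Because the comparison is by content hash, a re-annotation shows up as a change even when the file name stays the same, which is exactly the kind of history a data registry needs to keep.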

In general, be prepared to spend a lot of time on data: structuring, cleaning, labeling, visualization, etc. In my opinion, all of that is way more important than the actual ML/DL work. It is just something you have to deal with. So keep calm and spend time on data.

More about data management is here.

MLOps: something everyone has started talking about. To simplify, this is DevOps for ML. DVC probably belongs here as well. MLOps is everything you need to build good infrastructure for your ML projects: experiment tracking, comparison and reproduction, model saving and tracking, and whatever CI/CD you can use. The market is full of free and paid packages. We use MLflow and like it a lot. We have an MLflow server with a MinIO backend, where we store all our team’s experiments. We also have a Model Registry, which helps a lot in production: it gives us version control over the models and an easy way to load them.

MLflow is not the only solution, of course. Take a look at others: W&B, Comet, Neptune.

Also, a nice free book: Introducing MLOps from O’Reilly & Dataiku.

Plus, something that combines this and the previous point: the lecture MLOps: From Model-centric to Data-centric AI by Andrew Ng.

Let’s go to the methods and algorithms.

CV is the most advanced field in DL (sorry, NLP enthusiasts), which has led to a large variety of cool models and methods. On the other hand, something new appears every freaking day. Still, there are some classic constants that barely change. (In fact, if you are not into fundamental research, you can just choose some proven techniques and they will work. Well, most likely.)

There are always some SOTA backbone architectures, and they are fairly stable. ResNets are a good baseline; SE-ResNeXts are usually way better. EfficientNets are also awesome. U-Nets are a solid choice for segmentation, Faster R-CNN and YOLO for detection, and Mask R-CNN for instance segmentation (but usually you want a separate classification part).

There are a lot of nice GitHub repos for the models mentioned above. Just google them and find the one you like (or the one that fits your current situation). For example, I enjoy this PyTorch segmentation repo, or this EfficientNet package.

Resources: first of all, ML on Reddit. All the cool stuff ends up posted there anyway, so it is a must-read. Then Twitter (sorry). I follow people like the previously mentioned Andrej Karpathy. Find the ones you respect and trust, and follow them. You can also follow the official PyTorch/TensorFlow/you-name-it accounts; they announce and retweet a lot of cool stuff as well.

The best courses I’ve seen: fast.ai (good DL for coders, plus discussion of SOTA algorithms) and Full Stack Deep Learning, which is simply the best practical course I’ve seen. And the Stanford course on the basics of conv nets is a classic.

Kaggle is the perfect way to follow the development of the best tricks and techniques (crazy augmentations like MixUp and CutMix, tricky loss functions, multi-head networks, etc.). The creativity of the competitors (and people there are real athletes, imho) never ceases to surprise me. Plus, winning solutions usually come with blog posts, so keep an eye on them.
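MixUp and CutMix are easy to misread from a one-line description, so here is a minimal NumPy sketch of both, assuming a batch of images shaped `(N, H, W, C)` and one-hot labels shaped `(N, num_classes)`. MixUp blends each image with a partner from the same batch using a weight λ drawn from Beta(α, α); CutMix pastes a rectangular patch from the partner and mixes the labels by the patch’s actual area. The function names, the default α values, and the array layout are my assumptions for illustration, not any particular library’s API.

```python
import numpy as np


def mixup(images, labels, alpha=0.4, rng=None):
    """Blend every sample with a randomly chosen partner from the same batch."""
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(len(images))
    mixed_images = lam * images + (1.0 - lam) * images[perm]
    mixed_labels = lam * labels + (1.0 - lam) * labels[perm]
    return mixed_images, mixed_labels


def cutmix(images, labels, alpha=1.0, rng=None):
    """Paste a random box from a partner image; mix labels by the box's area."""
    rng = np.random.default_rng() if rng is None else rng
    n, h, w = images.shape[:3]
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(n)

    # Box side lengths are chosen so its area is roughly (1 - lam) of the image.
    cut_h = int(h * np.sqrt(1.0 - lam))
    cut_w = int(w * np.sqrt(1.0 - lam))
    cy, cx = rng.integers(h), rng.integers(w)
    y1, y2 = np.clip([cy - cut_h // 2, cy + cut_h // 2], 0, h)
    x1, x2 = np.clip([cx - cut_w // 2, cx + cut_w // 2], 0, w)

    mixed_images = images.copy()
    mixed_images[:, y1:y2, x1:x2] = images[perm, y1:y2, x1:x2]

    # Clipping at the borders can shrink the box, so recompute lam from its real area.
    lam = 1.0 - ((y2 - y1) * (x2 - x1)) / (h * w)
    mixed_labels = lam * labels + (1.0 - lam) * labels[perm]
    return mixed_images, mixed_labels


# Usage on a random toy batch: 8 RGB images of 32x32, 10 classes.
rng = np.random.default_rng(0)
batch = rng.random((8, 32, 32, 3))
onehot = np.eye(10)[rng.integers(10, size=8)]
x_mix, y_mix = mixup(batch, onehot, rng=rng)
x_cut, y_cut = cutmix(batch, onehot, rng=rng)
print(x_mix.shape, x_cut.shape)  # both keep the batch shape (8, 32, 32, 3)
```

Both functions return soft labels whose rows still sum to 1, so in a training loop you would apply one of them per batch and train with a cross-entropy that accepts probability targets rather than class indices.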

Some words about GPUs

Miners have blown up the market, and GPUs cost as much as a spaceship now. Still, you have options: either buy your own GPUs or rent them in the cloud. It is relatively easy to set up something on AWS or Google Cloud. Also, in my experience, a few 10xx/20xx-series cards are already a solid choice for most tasks at the beginning. Of course, that depends on the task and the data, but most likely you can survive at a smaller scale for a while.
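Whether a smaller card is enough mostly comes down to memory. A common back-of-the-envelope rule for FP32 training with Adam is about 16 bytes per parameter: 4 for the weights, 4 for the gradients, and 8 for Adam’s two moment buffers. Activations come on top of that and grow with batch size and input resolution, so the result is only a lower bound. The `training_memory_gb` helper below is a hypothetical sketch of that arithmetic, and the ResNet-50 parameter count is approximate.

```python
def training_memory_gb(num_params: float, bytes_per_value: int = 4, optimizer_states: int = 2) -> float:
    """Rough lower bound (in GiB) for weights + gradients + optimizer state.

    Activations are NOT included; for large images or batches they often dominate.
    optimizer_states=2 matches Adam (first and second moments);
    SGD with momentum would be 1, plain SGD 0.
    """
    values_per_param = 1 + 1 + optimizer_states  # weights, gradients, optimizer buffers
    return num_params * values_per_param * bytes_per_value / 1024**3


# ResNet-50 has roughly 25.6 million parameters.
print(f"{training_memory_gb(25.6e6):.2f} GiB")  # 0.38 GiB
```

For a ResNet-50-sized backbone, this static part is well under half a gigabyte, so on an 8 GB or 11 GB card from those generations most of the memory goes to activations, and batch size and image resolution are the knobs you actually tune.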

Hope I didn’t forget anything important!

I hope this helps someone in this crazy world of computer vision.

Good luck!